From my perspective… yes.

It pushes the industry forward.

On July 16th, Blackmagic Design introduced the Ursa Mini Pro 12K cinema camera. The announcement seemed like a lofty direction given that competitors are slowly rolling out 4K, 6K, and 8K cameras. It definitely took me by surprise, but as I digested the information and played with the 12K Ursa footage, Blackmagic’s direction began to make more sense.

On the surface, a 12K camera seems a little excessive when you directly compare it to current camera offerings. But if anything… it challenges others to ramp up their camera innovations and take larger risks at better price points. It’s refreshing to see this large technology leap, rather than a small incremental change meant to protect a product line or quarterly profit margins.

Real innovation is difficult to deliver year after year, but if a company can add value to a customer’s bottom line, the reward is usually a purchase. With this announcement, Blackmagic offers 14 stops of dynamic range from a Super 35mm sensor, recording 12K raw that can be edited on fairly modest gear. I’m sure some marketing and engineering teams have taken notice.

How the industry, DPs, and end users respond over time remains to be seen. It’s always challenging to make inroads into industries where folks might not see the need to change. We’ve been marketed to in “K’s” and megapixels for so many years, but ultimately it’s the final footage that matters. My hope is that this camera offers a real alternative to higher-priced REDs, ARRIs, and Canons.

My Initial Reaction

This thing will need gobs of storage along with an abundance of processing power to get reasonable performance. Raw 12K feels like overkill in an HD/4K world. Does packing that many photosites into such a small space really make sense?

As I dug deeper, graded the raw clips, and learned more about their camera and codec technology, I began to understand the reasoning behind their design choices. After playing with several clips, I came to appreciate their achievement: a high dynamic range 12K/8K/4K BRAW format that scales without cropping and that we can edit on laptops.

Once you work with 12K imagery as a starting point, it’s hard to go back to undersampled Bayer 4K video. The bottom line for me: there is a crispness and tonal smoothness to the imagery that is a pleasure to work with, with nice skin tones and no oversharpened artifacts. I’ve worked with raw workflows for seven years now, and BRAW makes it even easier with less storage overhead. With this sensor and codec technology, Blackmagic has laid a solid foundation for exciting hardware and software developments.

Blackmagic essentially developed a 12K spec that no one asked for. Why?

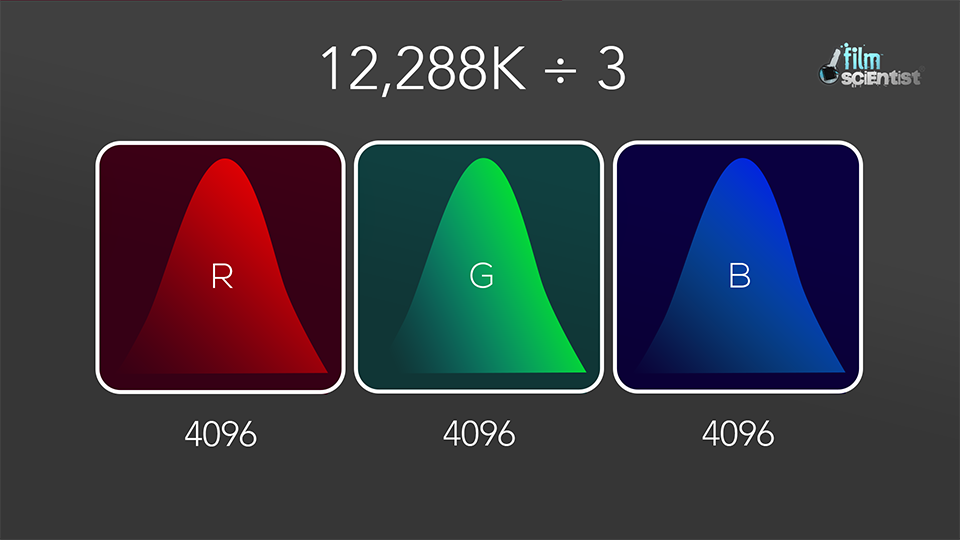

As we shift from HD to 4K as the deliverable of choice, my guess is that Blackmagic set out to create the best-looking full RGB 4K. Divide 12,288 by 3 and you get 4,096; divide 6,480 by 3 and you get 2,160, a full 4096 × 2160 frame. I believe their goal was to develop a chip tuned to capture great color (…and dynamic range, given those white photosites).
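The arithmetic behind that guess is easy to check. This short Python sketch uses only the sensor dimensions quoted here; `scale_factor` is a hypothetical helper name for illustration, and the result says nothing about how Blackmagic actually groups photosites internally.

```python
# Sensor dimensions quoted for the Ursa Mini Pro 12K, in photosites.
SENSOR_WIDTH = 12_288
SENSOR_HEIGHT = 6_480

# Target deliverable: a 4096 x 2160 frame.
TARGET_WIDTH = 4_096
TARGET_HEIGHT = 2_160


def scale_factor(sensor_px: int, target_px: int) -> float:
    """Hypothetical helper: photosites spanned by one output pixel along one axis."""
    return sensor_px / target_px


horizontal = scale_factor(SENSOR_WIDTH, TARGET_WIDTH)
vertical = scale_factor(SENSOR_HEIGHT, TARGET_HEIGHT)
print(horizontal, vertical)   # 3.0 3.0
print(horizontal * vertical)  # 9.0 photosites behind every 4K output pixel
```

Both axes divide evenly by the same factor, so the 4K frame keeps the full sensor area rather than a crop of it.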

As a filmmaker, I am always looking for archival mediums that stand the test of time. Film was “it” for a while; now the data equivalent is raw sensor footage. While we’ve had “4K” cameras for a while, many different flavors have defined the term over time. In the film world, when digital intermediates were first introduced, 4K meant scanning 4,096 red photosites, 4,096 green photosites, and 4,096 blue photosites to create an image 4,096 pixels across. With the introduction of “4K” cameras in the mid-2000s, the meaning of 4K started to get watered down by marketing. With the URSA 12K, it looks like we’re closer to fully oversampled RGB 4K based on actual captured data.
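To make the “watered down” point concrete, this sketch counts how many output pixels get a real measurement in each channel on a sensor with a standard RGGB Bayer layout, compared with an idealized three-pass RGB scan that samples every channel at every pixel. The RGGB layout and the helper names are assumptions for illustration; real scanners and cameras vary.

```python
from collections import Counter


def bayer_channel(row: int, col: int) -> str:
    """Color filter of a photosite in a standard RGGB Bayer layout (assumed)."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def channel_coverage(width: int, height: int) -> dict[str, float]:
    """Fraction of pixels that receive a real measurement, per channel."""
    counts = Counter(bayer_channel(r, c) for r in range(height) for c in range(width))
    total = width * height
    return {ch: counts[ch] / total for ch in "RGB"}


# One row pair of a 4096-wide "4K" Bayer sensor; the RGGB tile repeats every
# two rows, so the fractions are the same for the full frame.
bayer = channel_coverage(4096, 2)
# An idealized three-pass RGB scan measures every channel at every pixel.
rgb_scan = {ch: 1.0 for ch in "RGB"}
print(bayer)     # {'R': 0.25, 'G': 0.5, 'B': 0.25}
print(rgb_scan)  # {'R': 1.0, 'G': 1.0, 'B': 1.0}
```

Everything the Bayer sensor did not measure in a given channel has to be interpolated during demosaicing.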

From my perspective, Blackmagic is a company led by bold engineering choices rather than by marketing teams. Resolution in K’s is easy to sell because consumers can understand it, but qualities like dynamic range, color, skin rendering, and gradeability (is that a word?) are harder to define in the real world, let alone reach consensus on.

79,626,240 photosites, but it’s all about the design.

12,288 × 6,480 is a nice big number, but look deeper and this new sensor design also offers benefits to color and dynamic range.
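A quick check of the headline number, assuming nothing beyond the 12,288 × 6,480 dimensions above:

```python
import math

WIDTH, HEIGHT = 12_288, 6_480  # sensor resolution quoted above

photosites = WIDTH * HEIGHT
print(f"{photosites:,}")           # 79,626,240
print(round(photosites / 1e6, 1))  # 79.6 (megapixels)

# Reduce the frame to its simplest aspect ratio.
divisor = math.gcd(WIDTH, HEIGHT)
print(WIDTH // divisor, HEIGHT // divisor)  # 256 135
```

That works out to roughly 80 megapixels per frame, in the same 256:135 shape as a 4096 × 2160 frame.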

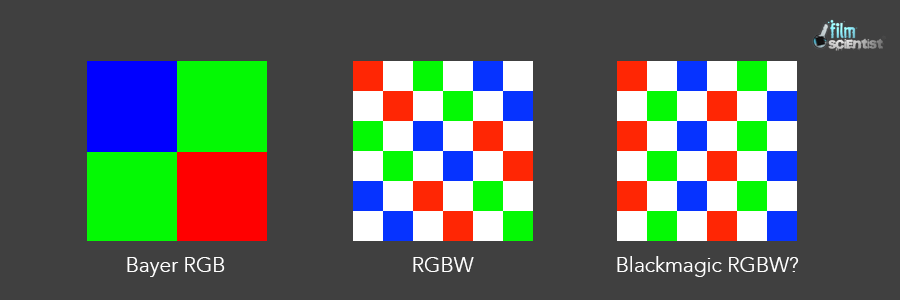

Blackmagic spent three years developing a custom chip, an alternative to the traditional Bayer color filter array. By adding clear photosites to the color filter array, the sensor captures true luminance data. That same information can also be used to calculate more accurate color, depending on how nearby pixels are combined. With this announcement, Blackmagic leads the way with a new type of RGBW CMOS sensor for cinema, at a resolution that gives still photo cameras a run for their money.

RGBW sensor technology has been used in smartphones for the past several years to overcome their tiny sensor area. I’m sure you’ve all seen dramatic photo improvements on your iPhones and Samsungs lately. Now Blackmagic becomes one of the first to apply it to S35mm cinema for some nice imaging gains. With this novel combination of sensor, codec, and software processing, Blackmagic has made 12K a viable capture medium, with every stage of its imaging chain designed to work together seamlessly.

Diving Deeper

As I dove deeper into their documents this past week, I got a glimpse of the innovative engineering and novel processing they’ve applied to Ursa’s 12K sensor. It collects data from both filtered and unfiltered photosites to calculate an image with greater dynamic range.

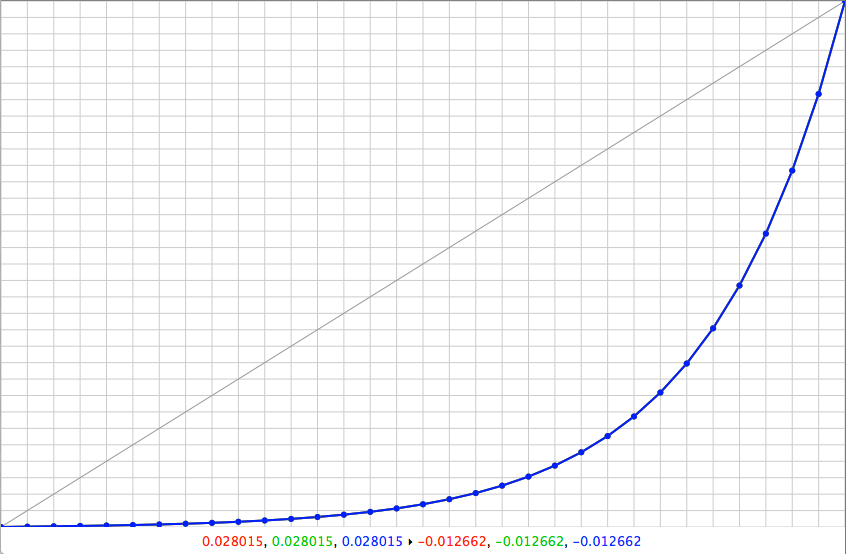

It captures red, green, blue, PLUS unfiltered white light. Because white light contains the full spectrum of all the other colors, they can use math to combine this wideband data with the information captured from nearby filtered photosites to better calculate each combined pixel (allowing for a better signal-to-noise ratio). The differing sensitivities of the wideband and colored sites can also be combined and processed in new ways, essentially acting as a dual gain device at the sensor level. And because the wells on the filtered RGB sites don’t saturate to the same level, they also act like low pass filtration. Benefits to dynamic range and color channels are possible because of the sheer number of data points, and various vertical and horizontal processing algorithms further refine this data.
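Why does a clear photosite help signal-to-noise? Under idealized assumptions (each color filter passes roughly a third of the visible light, and photon shot noise dominates, so noise grows with the square root of the photon count), the advantage can be estimated in a few lines. The photon counts are made-up illustration values, not Blackmagic measurements.

```python
import math


def shot_noise_snr(mean_photons: float) -> float:
    """SNR of a photon-limited reading: Poisson noise std is sqrt(mean)."""
    return mean_photons / math.sqrt(mean_photons)


# Hypothetical exposure: photons reaching one photosite through an idealized
# color filter that passes roughly a third of the visible spectrum.
filtered_photons = 300.0
# Idealized clear (W) site: no filter, so it collects roughly R + G + B.
white_photons = 3 * filtered_photons

gain = shot_noise_snr(white_photons) / shot_noise_snr(filtered_photons)
print(round(gain, 3))  # 1.732, i.e. sqrt(3)
```

Real filters overlap and real sensors add read noise, so the actual gain will differ; the sketch only shows the direction and rough size of the effect.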

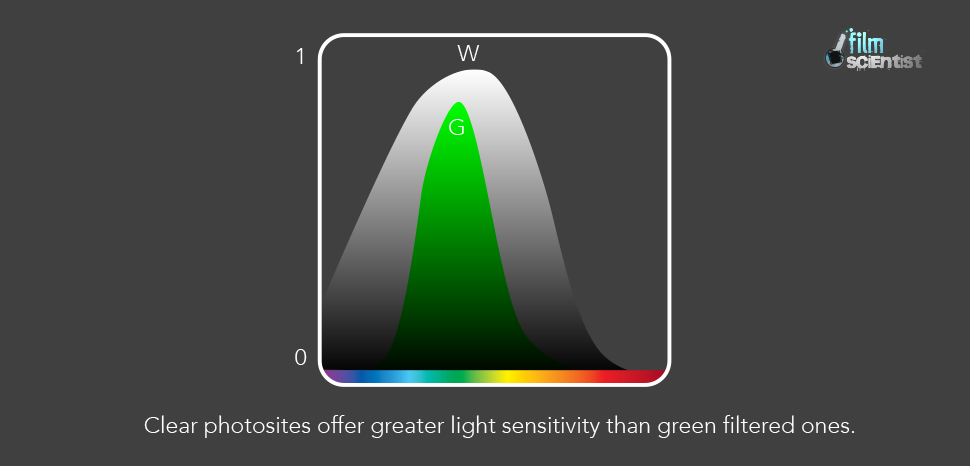

A white photosite is better at capturing luminance than a green-filtered photosite because no filter blocks any of the incoming light. While Bayer sensors derive luminance from a combination of red, green, and blue, clear photosites let the sensor measure luminance directly rather than relying solely on interpolation from RGB-filtered sites. So we’re essentially removing one more veil by measuring real data. As this technology matures, my hope is that we’ll start reaching even deeper into those blacks too. RGBW looks like the next evolution in sensors, so it stands to reason that, with time, it will progress to even larger sensors.
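The difference between measuring and interpolating shows up most clearly at edges. In this simplified one-dimensional sketch (a toy model, not a real demosaic algorithm), a channel is sampled at every other position and the gaps are filled by averaging the two neighbors, as basic linear interpolation does. A site that measures the value itself needs no such guess at its own position.

```python
def interpolate_missing(samples: list[float], measured: set[int]) -> list[float]:
    """Linear interpolation: fill each unmeasured position with the mean of its neighbors.

    Assumes every unmeasured position has measured neighbors on both sides.
    """
    estimate = list(samples)
    for i in range(len(samples)):
        if i not in measured:
            estimate[i] = (samples[i - 1] + samples[i + 1]) / 2
    return estimate


# A hard edge along one row of the sensor (true signal, hypothetical values).
true_signal = [0.1, 0.1, 0.1, 0.9, 0.9, 0.9, 0.9]
# Only every other site carries this filter, so odd positions must be estimated.
measured = {0, 2, 4, 6}

estimate = interpolate_missing(true_signal, measured)
errors = [abs(e - t) for e, t in zip(estimate, true_signal)]
print(estimate[3])            # 0.5, halfway across the edge instead of 0.9
print(round(max(errors), 2))  # 0.4
```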

While packing so many photosites into a Super 35mm sensor seems counterintuitive, it’s the combination of “W” photosites with BRAW working alongside them that makes all the difference. It looks like they’ve spent a great deal of time designing BRAW from the ground up to maximize the data captured by this sensor in very specific ways. Their unique array of clear photosites capturing direct luminance information allows for easy scaling to 8K and 4K. Previous-generation Bayer sensors consisted of RGB arrays that usually doubled the green photosites to derive luminance information and didn’t allow for raw scaling without cropping. With RGBW sensors, luminance is captured directly at the photosite level.
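One reason the integer ratio matters for scaling: 12,288 × 6,480 divides by exactly 3 in each direction to give 4,096 × 2,160, so every 3 × 3 block of photosites can collapse into one output pixel with no cropping. This sketch uses plain box averaging as a stand-in; Blackmagic’s actual scaling method isn’t described here, and the 8K case (a non-integer 1.5× ratio) needs something more sophisticated than this.

```python
def bin_average(image: list[list[float]], factor: int) -> list[list[float]]:
    """Downscale by averaging non-overlapping factor x factor blocks (box binning)."""
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image dimensions must be divisible by the factor")
    binned = []
    for top in range(0, height, factor):
        row = []
        for left in range(0, width, factor):
            block = [image[y][x]
                     for y in range(top, top + factor)
                     for x in range(left, left + factor)]
            row.append(sum(block) / len(block))
        binned.append(row)
    return binned


# The full sensor divides evenly: 12,288 x 6,480 -> 4,096 x 2,160 at factor 3.
assert 12_288 % 3 == 0 and 6_480 % 3 == 0

tiny = [[float(6 * y + x) for x in range(6)] for y in range(6)]  # 6x6 test patch
print(bin_average(tiny, 3))  # [[7.0, 10.0], [25.0, 28.0]]
```

Averaging nine samples with independent random noise also cuts that noise by a factor of three (the square root of 9), which is one intuition for why oversampled 4K can look cleaner.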

This is not a traditional Bayer sensor like those in RED, Canon, or ARRI cameras. The large amount of silicon devoted to capturing luminance in this array works much like our eyes, which balance vision between rods and cones. My guess is that it makes a great black and white camera too.

Why do we need those extra photosites?

Beyond the obvious answer of better resolution, the extra luminance photosites allow us to extract more dynamic range and better color conversion when processing each pixel.

While some oversampling happens simply because of the sheer number of photosites, the design’s genius lies in using the extra unfiltered white channel to derive better color and dynamic range through math based on this new sensor arrangement. Because white light contains a combination of all colors, the data gathered from those photosites can be used to calculate adjacent pixels. The BRAW processing can subtract red, blue, or green signals from white to arrive at different values. This is where BRAW works to extract the information in the most efficient way for use in their post imaging pipeline. Given the sheer amount of data captured, it also leaves room for future color science improvements as processing algorithms improve. Based on the patent documents online, the clear photosite seems to dramatically improve light efficiency when calculating pixel luminance (contributing to overall dynamic range) while also helping distinguish and improve color during demosaicing.
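Here is a hedged sketch of the subtraction idea. It assumes an idealized clear site whose response equals the sum of the red, green, and blue filter responses (W = R + G + B); real filters overlap and the actual BRAW math is not given in this article, so `recover_green` is a hypothetical helper.

```python
def recover_green(white: float, red: float, blue: float) -> float:
    """Estimate the green signal from nearby W, R and B samples.

    Hypothetical helper built on an idealized model (an assumption, not
    Blackmagic's published math): a clear site sees the sum of what the
    red, green and blue filters pass, W = R + G + B, so G = W - R - B.
    """
    green = white - red - blue
    # Noise can push the difference below zero; a negative signal is not physical.
    return max(green, 0.0)


# Illustrative values for one neighborhood of photosites (made up).
red, green_true, blue = 0.20, 0.50, 0.10
white = red + green_true + blue  # what the idealized clear site would read
print(round(recover_green(white, red, blue), 3))  # 0.5
```

The same rearrangement works for red or blue; in practice the useful part is that the white reading adds a low-noise constraint on the color estimate rather than replacing the filtered sites.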

What does this mean relative to the final image?

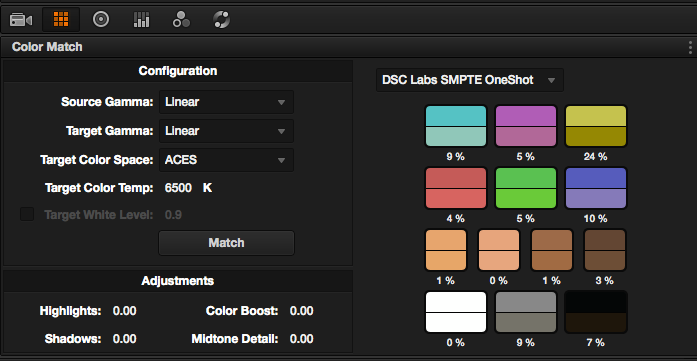

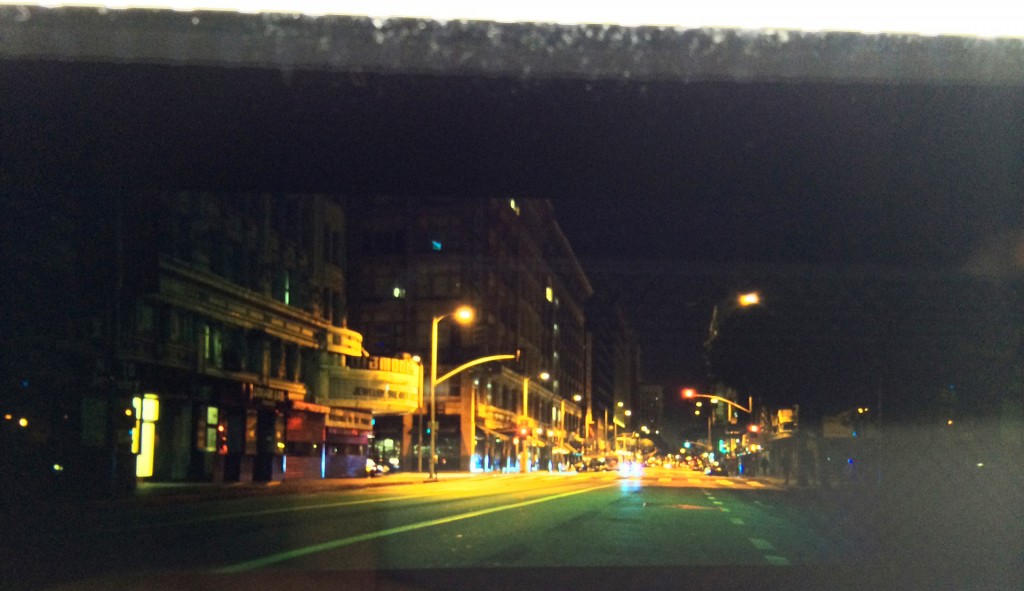

Based on the test clips Blackmagic and John Brawley shared, I feel like colors are more subtle and skin tones are more true to life. I’m excited by the clarity and color Blackmagic has achieved, along with their efficient use of the data captured.

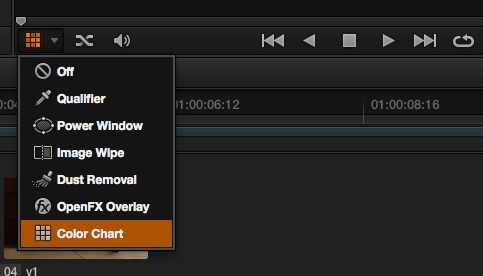

Here is downloadable test footage I graded on a 4K timeline using their native workflow…

Who is this camera for?

This camera seems targeted at folks looking for a more economical alternative to an ARRI Alexa or Sony Venice. If your goal is oversampled full RGB 4K, serious VFX, or content for very large-scale projection or LED displays, this camera is worth considering. Pulling a truly high-resolution 80 MP still from video is also a welcome bonus. Deep crops (while never a substitute for a change in perspective) are also possible, provided your lenses resolve the detail. Basically, if you are looking to craft an exacting image with great color and less aliasing and moiré, this camera is for you. It might not hold the prestige of some other cameras just yet, but from what I can see in John Brawley’s footage, it delivers the goods.

Ultimately, this camera gives artists a wider canvas on which to create their work. I really can’t wait to see it in the hands of more talented DPs. With so much flexibility in resolution, scaling, and frame rate, I’m excited to see what’s possible. I’m also curious to test the moiré limits and see how well one can key hair in challenging environments. I would also love to get a better understanding of how the sensor’s real-world MTF translates to lenses of various eras. It might be the ultimate cinema lens tester.

Limiting Factor: Lenses

Given its ability to resolve incoming light, this sensor will probably reveal lens characteristics in their full glory… good and bad. Deficiencies like chromatic aberration or edge softness will no doubt be magnified, so to maximize our results we’ll need to “up our lens game”. I have no doubt that putting an amazing lens in front of it will yield amazing results. The challenge will be finding a lens set up to the task of resolving that much detail. While vintage glass might be good for certain looks, modern glass might be the best way to fully exploit this sensor’s capabilities, especially when you get to HDR finishing.

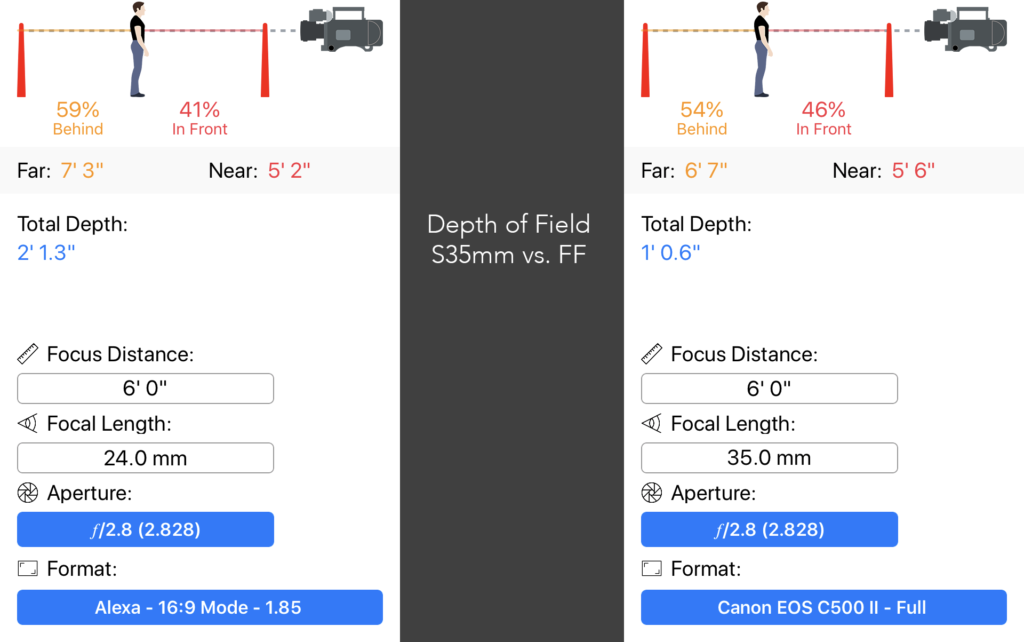

Why go with S35mm sensor size when other players are going Full Frame?

There is a reason the Super 35mm sensor has been the sweet spot for motion capture for so long. It provides a good mix of efficiency related to light, aperture, lenses, and depth of focus; basically, it balances the right amount of light capture with achievable focus. When you’re working with a crew lighting to T2.8 or T4 on S35mm, it’s much easier on your focus puller than achieving critical focus on Full Frame at T2.8. The moment you go to a larger sensor, focusing accuracy needs to increase dramatically, because matching the field of view requires longer lenses, which shrinks depth of field and changes lighting requirements. There is a smaller margin for focus error with Full Frame.
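The focus argument can be put into numbers with the standard thin-lens depth-of-field formulas. Everything here is an assumption for illustration: a roughly 27 mm-wide S35 sensor versus a 36 mm full-frame sensor, a 0.030 mm full-frame circle of confusion scaled down for the smaller sensor, a subject at 3 m, and T2.8 treated as f/2.8 for the geometry.

```python
import math


def depth_of_field_mm(focal_mm: float, f_number: float, coc_mm: float,
                      subject_mm: float) -> float:
    """Total depth of field from the thin-lens hyperfocal formulas."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        return math.inf  # far limit extends to infinity
    far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return far - near


# Hypothetical setup (assumptions, not published specs):
S35_WIDTH_MM = 27.0   # approximate Super 35 sensor width
FF_WIDTH_MM = 36.0    # full-frame sensor width
FF_COC_MM = 0.030     # common full-frame circle-of-confusion convention
SUBJECT_MM = 3_000.0  # subject at 3 m
F_NUMBER = 2.8        # treating T2.8 as f/2.8 for geometry

crop = FF_WIDTH_MM / S35_WIDTH_MM
s35_focal = 35.0
ff_focal = s35_focal * crop  # longer lens to match the field of view
s35_coc = FF_COC_MM / crop   # smaller sensor -> tighter blur tolerance

s35_dof = depth_of_field_mm(s35_focal, F_NUMBER, s35_coc, SUBJECT_MM)
ff_dof = depth_of_field_mm(ff_focal, F_NUMBER, FF_COC_MM, SUBJECT_MM)
print(f"S35: {s35_dof:.0f} mm, FF: {ff_dof:.0f} mm, ratio {ff_dof / s35_dof:.2f}")
```

With these numbers, the full-frame setup ends up with roughly three quarters of the S35 depth of field at the same stop and framing. Getting that depth of field back means stopping down, which in turn means more light: the lighting trade-off described above.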

Given the current state of autofocus technology across the cinema industry, I’m okay that Blackmagic has forgone the current marketing push for “full frame” cinema. It seems like a good call for now. Given the camera’s size once kitted out, it’s more of a “cinema style” camera anyway, so solid focus pulling will remain critical for best results. While I hoped this announcement would introduce a global shutter 6K Full Frame sensor with killer AF, for practical purposes, S35mm 12K with a manual lens would serve 96% of my needs for the foreseeable future.

The bottom line

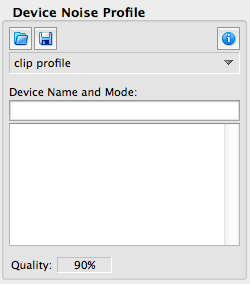

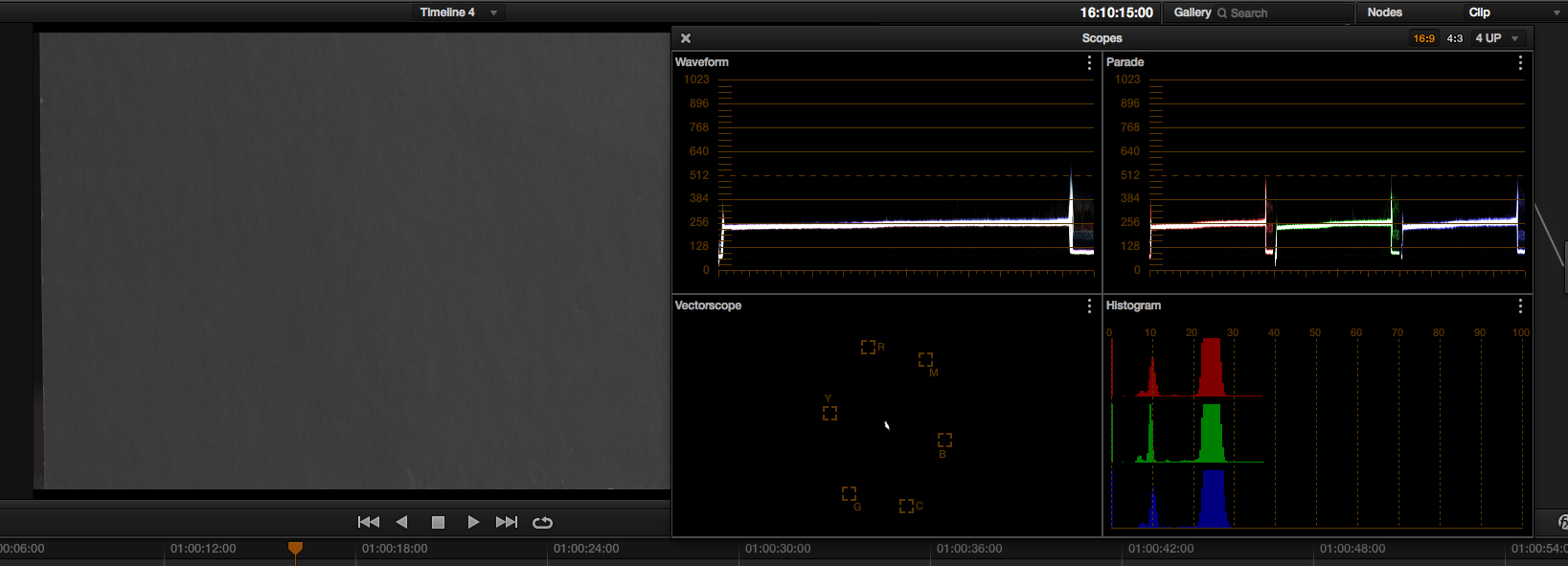

What it really comes down to is overall processing efficiency. 12K resolution doesn’t matter if you can’t actually work with it. I was able to edit and play back 12K sample clips on a 4K timeline on my old 2013 Mac Pro. While the machine chugged when I added a Neat Video noise reduction node (as it does with most 4K footage), 12K playback was smooth once I turned off my noise reduction nodes.

The ability to use seven-year-old hardware to edit footage from the latest 12K camera speaks to Blackmagic’s engineering prowess and their ability to accomplish more with less hardware. I wouldn’t recommend such an old machine for daily professional use on 12K material, but it’s possible and usable with a few caveats. The same cannot be said for some of the 6K/8K camera technology coming down the line from other manufacturers.

Million Dollar Color Science / Color Grading Advantage

Blackmagic is in a unique situation because DaVinci Resolve completes their high-end image processing workflow. Blackmagic offers a full end-to-end solution, from camera to codec to editing, audio mixing, and VFX. In terms of design cohesiveness, I think the only company offering a comparable end-to-end philosophy is Apple.

The original DaVinci Resolve grading suite literally started off as a million-dollar solution using custom hardware available to very few post facilities. It has since become a desktop solution that has changed the face of color grading. It’s a piece of software that looks complex on the surface, but if you take the time to learn it, the program will reward you with the ability to craft amazing imagery.

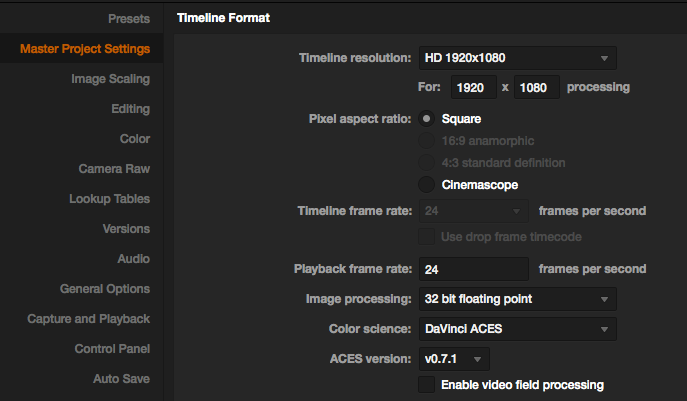

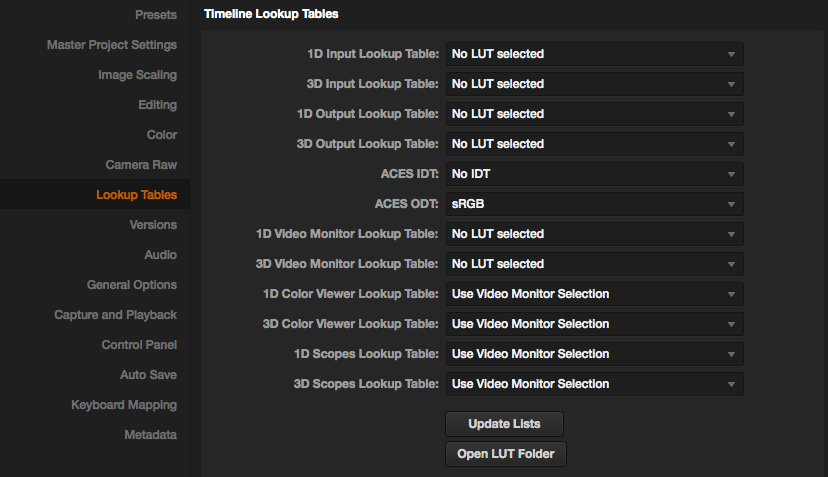

So if we combine this new sensor technology with Blackmagic’s expertise in color science, then we’re really cooking with fire. Cameras of ten years ago had heavy-duty DSPs for color science and custom chips for heavy encoding. With modern raw cinema cameras, this color processing has shifted to computers during post-production. This is where I believe Blackmagic gains an advantage with Resolve: they get to tailor their camera specifically to their nonlinear finishing tool and offer the best possible color science, one that evolves with the program.

If the Blackmagic 12K truly offers dynamic range and color science comparable to the ARRI standard at a budget price, we might see the playing field begin to level with regard to price. RED gets credit for starting the 4K marketing wave back in 2008. Now, in 2020, Blackmagic offers 12K with scalable 8K and 4K raw without cropping. I am excited to see continued testing by industry DPs once the camera becomes available.

Closing

I give kudos to Blackmagic for keeping this technology quiet for so long, no small feat. At a time when everyone has a camera phone in their pocket and social media is just a click away, I understand the challenge of secrecy. It’s something that can only be achieved by a tight-knit team working toward the same goal. With many technology companies driven by marketing committees or shareholder desires, I applaud Blackmagic’s engineering vision to help the cinema industry move forward.

What I like about Blackmagic is that they deliver innovative technology to the masses at a viable price point while embracing open standards. The BRAW SDK is available to all, and non-proprietary SSDs can be used for media capture.

I love seeing smaller companies truly innovate for their customer base. Blackmagic has earned my loyalty as a customer since 2013 with DaVinci Resolve and their 4K Production Cameras. Their products continue to provide great value through the years. I can’t wait to shoot with an Ursa 12K.

My Own Journey

Blackmagic Design has always been ahead of the curve in many ways. I moved from Avid to FCP in 2002, Premiere in 2010, and Resolve in 2013. Each transition in software came as a result of my need to achieve results the previous NLE software couldn’t tackle.

In 2013, the catalyst for my full shift to Resolve was its ability to edit and grade raw and DPX timelines well before its competitors could. In the end, I tired of competitors’ subscription models, shifted to Resolve as my full editing, grading, and finishing solution, and haven’t looked back.

The Resolve key that came with my first Blackmagic camera still works today. Seven years later, my 4K Production Camera still delivers great footage and is still one of the few with a global shutter. Today, I marvel that I can edit 12K BRAW with DaVinci Resolve 16 Studio on a computer that is dated and underpowered by current standards. How is that for value and engineering efficiency?

Reference: https://patents.google.com/patent/US20190306472A1